Fake Future #1: Deepfake videos

Oliver Kampmeier

Cybersecurity Content Specialist

We like to be on the cutting edge, whether that means new methods of advertising fraud, bot traffic, or new technologies that have the potential to change the world.

One of these technologies is deepfakes: mostly short videos that show up in your social media feed or are forwarded by someone else. Yes mom, I saw Tom Cruise’s crazy channel on TikTok and I can’t believe it myself.

This article is part of a 3-part series on deepfakes, each covering a specific form of deepfake. With this series, we want to take you on a journey into computer-generated imagery and video that is pretty wild in places and could have serious implications for humanity.

This article focuses on deepfake videos: what they are, how they are created, and what impact this technology can have on society as a whole.

What are deepfakes?

Deepfakes use a form of artificial intelligence called deep learning to create fake images, audio or video – hence the name deepfake. Deep learning algorithms are able to teach themselves how to solve problems when given a large set of data.

Even in the unlikely case that you are not familiar with the name, there is a pretty good chance that you have already seen a deepfake video. The most popular videos include:

- Obama calling Donald Trump a dipshit

- President Nixon giving the “In Event of Moon Disaster” speech in which Neil Armstrong and Edwin Aldrin fail to return from their moon mission.

- Mark Zuckerberg raving about his world domination

How are deepfakes made?

Video and image manipulation have existed for several decades. It was in 2017, however, that a user named “deepfakes” posted manipulated adult movies on Reddit. The videos swapped the faces of the actresses with those of celebrities like Taylor Swift and Gal Gadot.

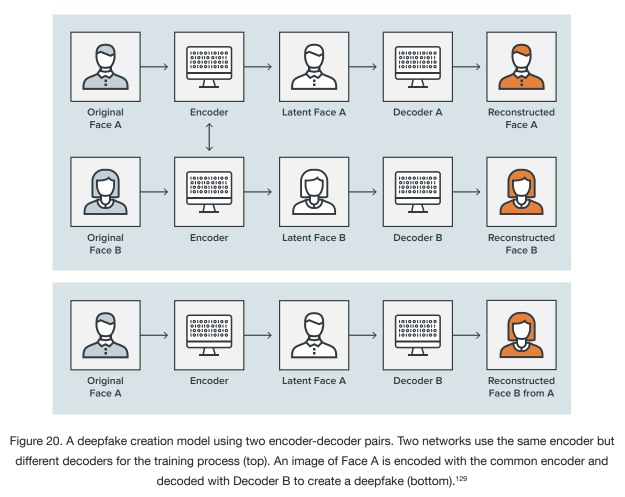

But how exactly do you swap faces of people in a video? There are several techniques using machine learning algorithms that can be used to accomplish the task. They are based on autoencoders and sometimes even on more sophisticated Generative Adversarial Networks (GANs). Both have in common that they must first be trained on a dataset in order to be able to replace a part of a person’s face.

To start, you need data – a lot of data. You need images of the face of the person you want to swap with another. For a good result, you need several thousand of these images covering as many conditions as possible: light and dark exposure, frontal and side views, and different facial expressions. This is easier with celebrities, because thousands of pictures of them are available on the Internet, in various photo databases and in interviews.

Autoencoders

To keep it as simple as possible: Autoencoders are a family of self-supervised neural networks that learn how to replicate their input. They consist of 3 main components: an encoder, a code, and a decoder.

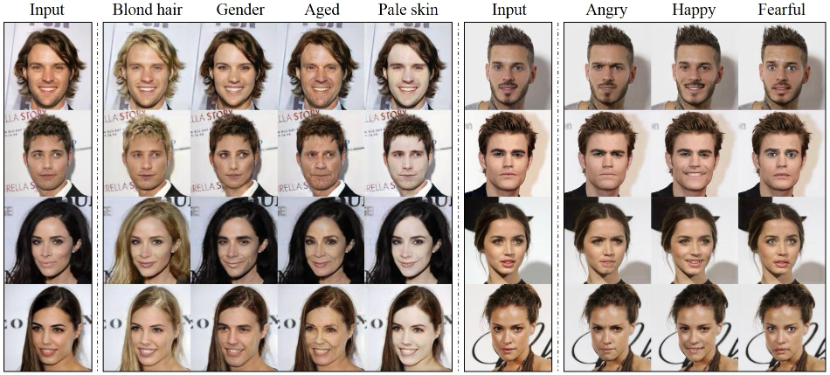

The encoder looks for similarities between the two faces you want to swap, reducing them to their common features. It compresses the input data and generates the code that the decoder uses in the further process.

After the encoder is done with its work, the decoder takes over. This algorithm is trained to recover faces from the compressed images generated by the encoder. You need to train two different decoders on top of the same shared encoder: one that recovers the face of the original person and another that recovers the face you want to swap in.

All you need to do now is to feed the compressed images into the “wrong” decoder: The original person’s face is fed into the decoder trained on the person’s face you want to swap – the “fake”. The decoder is then able to reconstruct the “fake” face with the expressions and orientation of the original person’s face.
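To make the “wrong decoder” trick concrete, here is a minimal Python sketch. It is a toy under loud assumptions: the “faces” are made-up 8-number vectors instead of images, the encoder and decoders are single linear layers instead of deep networks, and every name in it (`toy_faces`, `train_step`, `decoder_a` and so on) is hypothetical. It is not how any particular deepfake tool works; it only shows the data flow of one shared encoder feeding two person-specific decoders.

```python
import random

rng = random.Random(0)
PIXELS, CODE_SIZE = 8, 2  # toy sizes (assumption); real models work on full images

def random_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def toy_faces(count):
    """Hypothetical stand-in for a face dataset: a fixed 'identity' vector
    plus a varying amount of one 'expression' vector."""
    identity = [rng.random() for _ in range(PIXELS)]
    expression = [rng.uniform(-0.3, 0.3) for _ in range(PIXELS)]
    faces = []
    for _ in range(count):
        amount = rng.uniform(-1, 1)
        faces.append([i + amount * e for i, e in zip(identity, expression)])
    return faces

encoder = random_matrix(CODE_SIZE, PIXELS)    # shared by both people
decoder_a = random_matrix(PIXELS, CODE_SIZE)  # recovers faces of person A
decoder_b = random_matrix(PIXELS, CODE_SIZE)  # recovers faces of person B

def reconstruct(face, decoder):
    return matvec(decoder, matvec(encoder, face))

def train_step(face, decoder, lr=0.02):
    """One gradient step on the squared reconstruction error."""
    code = matvec(encoder, face)
    error = [r - f for r, f in zip(matvec(decoder, code), face)]
    # Backpropagate through the decoder before changing its weights.
    grad_code = [sum(decoder[i][j] * error[i] for i in range(PIXELS))
                 for j in range(CODE_SIZE)]
    for i in range(PIXELS):
        for j in range(CODE_SIZE):
            decoder[i][j] -= lr * error[i] * code[j]
    for j in range(CODE_SIZE):
        for k in range(PIXELS):
            encoder[j][k] -= lr * grad_code[j] * face[k]

def mean_error(faces, decoder):
    total = 0.0
    for face in faces:
        recon = reconstruct(face, decoder)
        total += sum((r - f) ** 2 for r, f in zip(recon, face))
    return total / len(faces)

faces_a, faces_b = toy_faces(20), toy_faces(20)
error_before = mean_error(faces_a, decoder_a) + mean_error(faces_b, decoder_b)

# Each decoder only ever sees its own person; the encoder sees both.
for _ in range(300):
    for face_a, face_b in zip(faces_a, faces_b):
        train_step(face_a, decoder_a)
        train_step(face_b, decoder_b)

error_after = mean_error(faces_a, decoder_a) + mean_error(faces_b, decoder_b)

# The swap: encode a face of person A, then decode it with B's decoder.
swapped = reconstruct(faces_a[0], decoder_b)
```

With toy vectors the swapped output is of course not a recognizable face. The point is the last line: nothing about the swap is special, it is an ordinary reconstruction that simply uses the other person’s decoder.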

Generative Adversarial Networks (GANs)

The second method to create deepfakes is by using Generative Adversarial Networks. This approach lets two different neural networks compete against each other: the generator and the discriminator.

The generator autonomously learns to reproduce regularities and patterns in an input data set. This reproduced data is then sent to the discriminator for evaluation along with real data.

The discriminator looks at the data, tries to spot the reproduced “fake” data from the generator within the dataset, and gives feedback on the generator’s performance. The goal of the generator is to fool the discriminator so that it can no longer distinguish between real and reproduced data.

The back and forth between the generator and the discriminator is repeated countless times, improving the result with each cycle until the generator produces realistic faces of non-existent people and thus successfully fools the discriminator.

Because of the increased effort required to train the models and the need for more computing resources, GANs are often used only to generate photos and not videos.
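The back and forth between generator and discriminator described above can be sketched in a few lines of Python. This is a deliberately tiny, hypothetical example: the “data” are single numbers drawn around 4.0 instead of images, the generator is just `a*z + b`, the discriminator is a single logistic unit, and the class and method names are made up for illustration. Real GANs use deep networks, and even this toy version is not guaranteed to settle down, since GAN training is known to oscillate.

```python
import math
import random

def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

class ToyGAN:
    """1-D toy: generator g(z) = a*z + b, discriminator D(x) = sigmoid(w*x + c)."""

    def __init__(self):
        self.a, self.b = 1.0, 0.0  # generator parameters
        self.w, self.c = 0.0, 0.0  # discriminator parameters

    def generate(self, z):
        return self.a * z + self.b

    def discriminate(self, x):
        """Estimated probability that x is real."""
        return sigmoid(self.w * x + self.c)

    def discriminator_step(self, real, fake, lr):
        # Minimise -log D(real) - log(1 - D(fake)).
        grad_real = self.discriminate(real) - 1.0  # gradient w.r.t. the real logit
        grad_fake = self.discriminate(fake)        # gradient w.r.t. the fake logit
        self.w -= lr * (grad_real * real + grad_fake * fake)
        self.c -= lr * (grad_real + grad_fake)

    def generator_step(self, z, lr):
        # Minimise -log D(g(z)): push samples toward what D considers real.
        grad_sample = (self.discriminate(self.generate(z)) - 1.0) * self.w
        self.a -= lr * grad_sample * z
        self.b -= lr * grad_sample

def train(gan, steps=2000, lr=0.05, seed=0):
    rng = random.Random(seed)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # stand-in for "real data" (assumption)
        fake = gan.generate(rng.gauss(0.0, 1.0))
        gan.discriminator_step(real, fake, lr)      # discriminator gives feedback
        gan.generator_step(rng.gauss(0.0, 1.0), lr)  # generator tries to fool it
    return gan

gan = train(ToyGAN())
```

After a single discriminator step on a real value of 4.0 and a fake value of 0.0, the discriminator already rates 4.0 as more likely to be real, and the next generator step nudges `b` upward in response. Repeating that exchange many times is the whole idea.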

In the following video you can see how to create a complete deepfake video with the face of Tom Cruise:

Are deepfakes only about videos?

No. The deepfake technology can also create fictional photos and voice clones from scratch.

An example of fictitious photos would be the website this-person-does-not-exist.com, which shows real-looking photos of people who do not exist. The AI has been trained on thousands of profile images and knows how to draw realistic faces. These images are then used by companies on LinkedIn, for example, to create fake profiles to boost their sales reps and bypass algorithms that could limit visibility.

According to its latest transparency report, LinkedIn removed over 30 million fake accounts in 2021, some of them due to computer-generated profile images.

An example of deepfake audio can be heard in Disney’s series The Mandalorian. Using an application called Respeecher, the production team was able to fully clone the voice of young Luke Skywalker. The AI was trained on earlier radio broadcasts and interviews featuring Mark Hamill’s voice. The results were so good that they went unnoticed for over 9 months, between the release of Season 2 and the accompanying documentary.

What technology do you need to create deepfake videos?

As difficult and complicated as creating a deepfake video may sound, in truth it is very simple. With the advancement of technology, computers are becoming faster and faster, so nowadays it is even possible to create such a video at home. All you need is a semi-powerful computer with a fast graphics card. For more demanding videos, there are also cloud-based data centers that provide you with more power.

You also do not have to develop the corresponding models and algorithms yourself, but can make use of freely available open source software:

All you need are basic programming skills to get the tools up and running and set the appropriate parameters.

But nowadays, you do not even need a computer. You can also create deepfake videos with your smartphone. There are some apps with which you can edit your face on memes and even social media videos:

What are deepfake videos used for?

As with so many technologies, it is not surprising that deepfake videos also found their initial distribution through the porn industry. Faces of female celebrities, including Emma Watson, Jennifer Lawrence and Daisy Ridley, were placed on actresses.

The next phase also involved women associated with the heads of state of various countries, such as Michelle Obama or Kate Middleton.

After nude celebrities, politicians were the next target group of fake videos. Probably the most famous video comes from Buzzfeed, in which President Barack Obama calls Donald Trump a dipshit. In another video, which admittedly is immediately recognizable as fake by the mouth and dubbing, Donald Trump makes fun of Belgium’s membership in the Paris Climate Agreement.

The latest political scandal around deepfakes involved Berlin’s mayor Franziska Giffey, who apparently held a video call with a fake of Kiev’s mayor Vitali Klitschko.

But are all deepfake videos created for malicious purposes? Definitely not! Below are some use cases for deepfakes. Some are morally questionable like the ones mentioned above, others use the new technology for entertainment and even education.

Art

The Dali Museum in St. Petersburg, Florida, used deepfake technology to bring the “master of surrealism” back to life. Using over 6,000 images of him and training the model for over 1,000 hours, the artificial intelligence learned in great detail what he looked like and how his mouth and eyes moved.

The result is 45 minutes of new footage divided into 125 short videos for visitors to watch. With 190,512 possible combinations, visitors’ experiences are practically unique. A built-in camera also makes selfies with Dali possible.

This is an excellent example of how AI, and deepfakes in particular, can provide people not only with entertainment and memorable experiences, but also how this technology can be used for an educational and meaningful purpose.

Film / Media industry

Most likely you have already seen a movie in which this kind of technology was used. A common use is to recreate actors who have passed away or to show them at a younger age. This is what happened with Peter Cushing and Carrie Fisher, whose likenesses both appear in “Rogue One: A Star Wars Story”. There are also plans to resurrect James Dean for a new movie.

Another application in the film industry is the rejuvenation of actors. In “The Irishman”, Robert De Niro, Al Pacino and Joe Pesci received a digital facelift.

Will deepfakes be able to replace human actors in the future? Who knows, but most likely not entirely. However, the example of the South Korean broadcaster MBN shows that it is certainly possible to replace a person on screen.

Last year, news anchor Kim Joo-Ha was replaced by a deepfake of herself during a broadcast. MBN has already acknowledged that they plan to continue using the system for breaking news in the future.

Anonymity

To protect the identities of gay Chechens, whose sexual orientation can lead to torture and death in their home country, filmmakers used deepfakes in the 2020 HBO documentary “Welcome to Chechnya”. Instead of the traditional anonymous silhouette interviews, viewers get an intimate look into the lives of gay Chechens fleeing persecution. Without deepfake technology, such a documentary would have been less compelling and moving.

Extortion

Of course, such technology is already used by scammers to extort people. In one case, the scammers set up fake profiles on online dating sites and pretended to be women. They then sent friend requests to men and persuaded them to make a video call. During the conversation, a computer-generated video of a woman was used to trick the victim into masturbating. The scammers recorded everything, of course, and the victim received an extortion call afterwards.

If the victim refuses to pay, phase 2 starts. The scammers use a photo of the victim taken during the video call and produce a second deepfake video depicting the victim during a sexual act. So the fraud continues.

Fashion industry

Fashion companies are currently facing a major problem: new products have to be brought from the planning stage to the market as quickly as possible in order to satisfy fast fashion followers. The photoshoots alone can take a few weeks. Models need to be cast, stylists found, photographers hired, and all the items of clothing must be physically present in one place for the shoot to take place.

But here, too, deepfakes could provide a solution. Looklet offers virtual models that can wear any clothing in various poses. All the manufacturer has to do is photograph each item on a mannequin and upload the image, regardless of the production location or other service providers. Manufacturers can choose from a variety of different models, including kids and plus-size models, and present their pieces on their website quickly and easily.

Potential threats of deepfake videos

Although deepfake technology has many legitimate use cases, there are also potential dangers that can come from it. Europol and UNICRI have identified several scenarios of possible future threats from deepfakes. These include:

- Disinformation campaigns

- Securities fraud

- Extortion

- Online crimes against children

- Obstruction of justice

- Cryptojacking

- Illicit markets

For more information and examples of each scenario, please read the full report here.

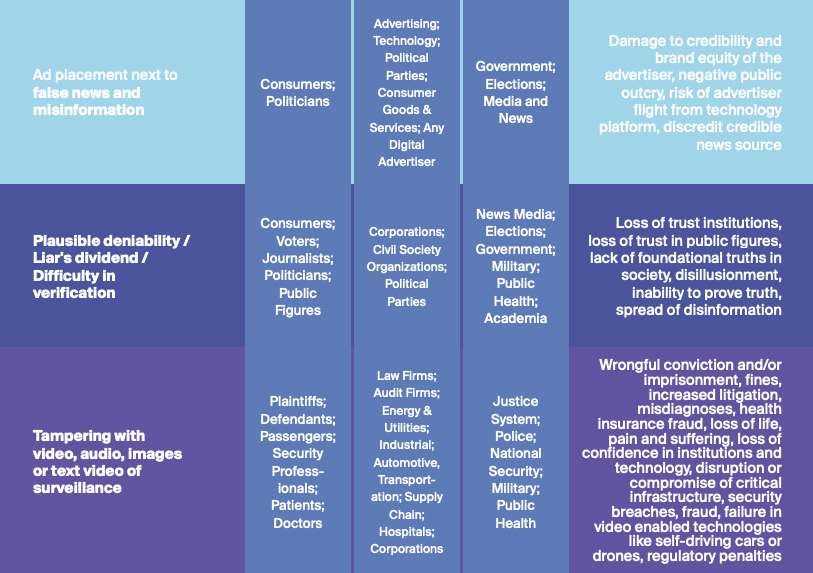

Another report worth reading, from the Deeptrust Alliance, also lists potential threats to individuals and organizations. One identified threat revolves around ad placement alongside fake news.

How to spot a deepfake video?

As deepfake algorithms get better at creating realistic videos, it becomes harder and harder to separate them from real ones. Ironically, artificial intelligence could be the answer to detecting fake videos created by another AI.

AI can detect signs and inconsistencies in a video that could easily be missed by the human eye. A few years ago, unusual eye blinking was a good sign of a deepfake video. But as soon as the research paper describing this was published, the algorithms were improved and were able to display the blinking realistically.

Look for the following signs when trying to identify whether a video is fake or not:

- Lighting is off

- Strange shadows

- Shifts in skin color or unreal skin color

- Speaker inconsistencies

- Jerky movements

- Additional pixels

- Multiple light reflections in the eyes

Since a manual check would not only be very error-prone, but also impractical due to the large volume of deepfake videos, a technical and automated solution must be found. Some international companies, universities and governments held the Hackathon for Peace, Justice and Security in 2019 to solve this very problem.

Microsoft, Facebook and Amazon also held a joint Deepfake Detection Challenge to find a solution to automatically detect deepfake videos. Unfortunately, the results still left much to be desired. The best model only had a detection rate of 65% – too poor to be used productively at present.

Still, there are already some tools and techniques that have taken on the problem and keep improving their models:

- You can scan questionable videos online with deepware.ai to find out if they are synthetically manipulated

- Microsoft is working to combat disinformation, but the tool is not currently available to the public

- Researchers are able to detect biological signals from the video and detect fake heartbeats

- By analyzing light reflections in the eyes, a tool can determine whether a video is a deepfake or not

- Another technique exploits the fact that visemes, which denote the dynamics of the mouth shape, sometimes differ from or are inconsistent with spoken phonemes.

- Operation Minerva is a system that can detect deepfake porn and automatically send a notification for deletion

- and many more
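To give a feel for how one of these checks could work, here is a minimal Python sketch of the viseme/phoneme idea from the list above. It assumes, for illustration only, that some earlier step has already labeled each video frame with the spoken phoneme and with whether the mouth is closed. That input format, the function name and the restriction to the sounds M, B and P (which are normally spoken with closed lips) are assumptions of this sketch, not the method of any specific tool.

```python
# Sounds that are normally produced with the lips pressed together.
CLOSED_LIP_PHONEMES = {"M", "B", "P"}

def mismatch_rate(frames):
    """Share of closed-lip sounds during which the mouth is visibly open.

    frames: list of (phoneme, mouth_is_closed) pairs, a hypothetical
    format produced by earlier audio and video analysis steps.
    """
    relevant = [closed for phoneme, closed in frames
                if phoneme in CLOSED_LIP_PHONEMES]
    if not relevant:
        return 0.0  # nothing to judge in this clip
    mismatches = sum(1 for closed in relevant if not closed)
    return mismatches / len(relevant)

frames = [("M", True), ("AH", False), ("B", False), ("P", True)]
rate = mismatch_rate(frames)  # 1 of the 3 closed-lip sounds has an open mouth
```

A high rate would only be a hint that the mouth movements were synthesized, not proof. Where to draw the line is something a real detector would have to learn from labeled examples.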

Currently, there is no technical solution that detects effectively and accurately whether a video is fake. As with so many other technologies, it will be a head-to-head race. On one side are developers who train models to deliver results that are as realistic as possible and to outsmart detection mechanisms. On the other side are researchers, governments and large platforms that want to reliably detect fake videos and prevent their potentially dangerous distribution.

What does the law say about deepfake videos?

Creating deepfake videos is not illegal per se. However, there are some countries that actively combat the spread of deepfakes. China, for example, passed a law in early 2022 that prohibits large platforms like TikTok, which use large amounts of data to personalize their content, from showing users fake videos in their news feed. The regulations target the spread of false information that could lead to scams or social instability.

Other examples are Texas and California. Both states have banned politically motivated deepfake videos within 30 days before an election. Additionally, similar to Virginia, California has also banned deepfake pornography.

But the biggest problem will probably be the lack of jurisdiction over extraterritorial originators of deepfakes. No matter how many laws exist, they are of little use if they do not apply to the creator of a video because that person is located in another country.

“Therefore, injunctions against deepfakes may only be granted under few specific circumstances, including obscenity and copyright infringement.” (Source)

What is the future of deepfakes?

Much like Computer Generated Imagery (CGI) in the early 90s, deepfakes are a breakthrough technology, but with greater potential for abuse. Some might even say deepfakes will wreak havoc and undermine trust.

Many companies are already working on deepfake models that do not require a large amount of data as input, but can work with just a single image. Samsung, for example, has managed to bring the Mona Lisa to life with their technology.

Other companies, such as Synthesia, are working on a complete package. Artificial intelligence is used to generate videos complete with speakers and voice output from pure text documents. Customers can choose from a range of different speakers, including different dialects, or have themselves recreated as a virtual avatar if required. After that, the system only needs the text to be spoken in the video and does the rest by itself.

It will also be interesting to see how various governments react to the potential threat and deal with the technology in the future.

Whatever the future holds for deepfakes, one thing is certain: Deepfake videos are here to stay.

- Published: July 1, 2022

- Updated: July 2, 2025
