ChatGPT – A new era of fraud

Oliver Kampmeier

Cybersecurity Content Specialist

Few topics have been as prominent in recent months as ChatGPT, an artificial intelligence (AI) that can communicate with humans via text, summarize information and texts, and even write its own texts on a given topic.

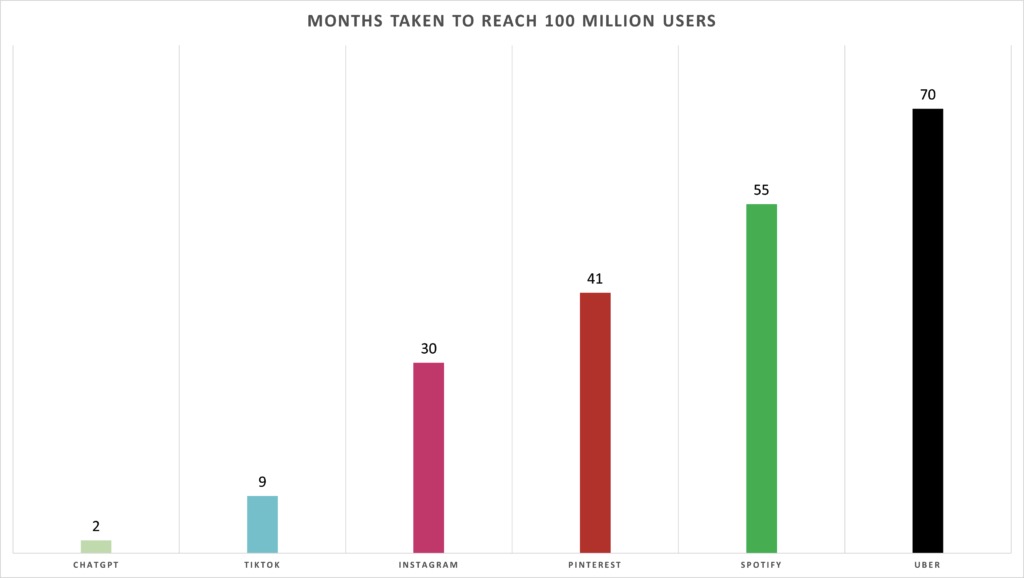

But ChatGPT is also interesting from a business perspective. No other product has cracked the magic 100 million user mark so quickly: it took Instagram 30 months, TikTok only 9, and ChatGPT an incredible 2.

However appealing it may be to integrate ChatGPT into your daily routine to save time on certain tasks, the consequences of this technology can be far-reaching if it falls into the wrong hands.

In the following article, we take a look at how ChatGPT can be used for (advertising) fraud. How is it already being used with malicious intent, and what else can we expect in the future?

ChatGPT and fraud – the possibilities are manifold

We have already noted in several places that fraudsters are smart people. They are early adopters of the latest technologies and always find ways to use them for their fraud schemes. This is also the case with ChatGPT. Below is a partial list of ways ChatGPT is already being used by fraudsters today, just a few months after its official release.

Fake ChatGPT apps and websites

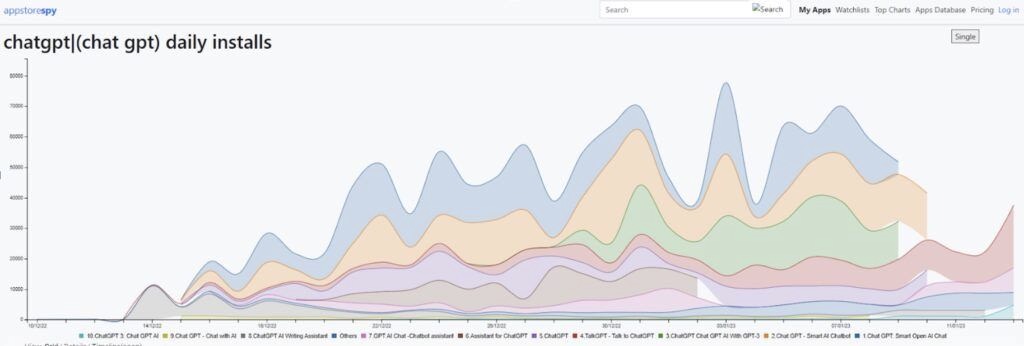

Even before OpenAI announced its ChatGPT API on March 1, 2023, there were already numerous apps in the app stores promising to bring ChatGPT to smartphones. What they all have in common is that they use “ChatGPT” in their name to profit from the hype and the increase in search queries.

However, many of these apps integrate only a simple chatbot and constantly display hidden ads in the background. Others are part of malware campaigns: they infect users’ devices and steal personal information such as passwords and credit card data.

In addition to fake apps, there are also several fake websites that are exact copies of the official ChatGPT website. These websites either offer visitors a download of ChatGPT (at the time, the service was only available via the browser) or imitate the payment page where visitors can supposedly upgrade to ChatGPT Plus. In reality, users lose their money and their data.

Terrorism, propaganda, and disinformation

ChatGPT excels at producing authentic-sounding texts quickly and on a large scale. This makes the model ideal for propaganda and disinformation campaigns, as it allows fraudsters to create and distribute messages that reflect a specific narrative with relatively little effort.

In addition, it is now possible for anyone to train a language model on their own data and sources for very little money. A language model trained on fake news will reproduce fake news, because it knows no other reality.

“Just as the Internet democratized information by allowing anyone to post claims online, ChatGPT further levels the playing field, ushering in a world where anyone with bad intentions has the power of an army of skilled writers to spread false stories.” (Source)

Coupled with the microtargeting capabilities of social networks, this makes for a highly explosive mix for the next elections in Western countries.

Social Engineering / Impersonation

Not only can language models such as ChatGPT produce authentic-looking texts, they can do so in a specific, predetermined language style. Europol even explicitly warns against this use in its paper “ChatGPT – The Impact of Large Language Models on Law Enforcement”:

“The ability of LLMs to recognize and mimic speech patterns not only facilitates phishing and online fraud, but can also be used more generally to mimic the speech style of specific individuals or groups. This capability can be widely abused to trick potential victims into placing their trust in the hands of criminal actors.” (Source)

Beyond phishing attacks, this capability can also be used for fake websites. Until now, fraudsters usually created an exact copy of a company’s landing page, often using website-copying tools like HTTrack. With ChatGPT, however, they can now fake an entire website that could very well be the real thing, but is not.

In this way, fraudsters deceive both visitors and security systems, which in most cases only look for exact copies of the real site. The altered content is enough to fool these systems, while visitors still see what appears to be a genuine website.
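To make this concrete, here is a minimal Python sketch contrasting an exact-copy check (a SHA-256 hash of the normalized page text) with a simple near-duplicate check (Jaccard similarity over three-word "shingles"). It assumes nothing about how any particular security product works; the function names and sample texts are hypothetical and chosen only for illustration.

```python
import hashlib
import re


def normalize(text: str) -> list[str]:
    # Lowercase the text and keep only word tokens, ignoring punctuation.
    return re.findall(r"[a-z0-9]+", text.lower())


def exact_fingerprint(text: str) -> str:
    # Exact-copy check: any change to the normalized words changes the hash.
    return hashlib.sha256(" ".join(normalize(text)).encode("utf-8")).hexdigest()


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    # Overlapping k-word sequences ("shingles") of the text.
    words = normalize(text)
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: str, b: str, k: int = 3) -> float:
    # Near-duplicate check: share of shingles both texts have in common (0.0 to 1.0).
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical landing-page copy and three kinds of imitation.
original = "Compare flight prices from hundreds of airlines and book your next trip in minutes."
cloned = "Compare flight prices from hundreds of airlines and book your next trip in minutes!"
lightly_edited = "Compare flight prices from hundreds of airlines and book your next vacation in minutes."
reworded = "Find cheap flights across hundreds of carriers and reserve your next journey in minutes."

for label, candidate in [("cloned", cloned), ("lightly_edited", lightly_edited), ("reworded", reworded)]:
    same_hash = exact_fingerprint(candidate) == exact_fingerprint(original)
    print(f"{label}: exact match={same_hash}, similarity={jaccard(original, candidate):.2f}")
```

Only the cloned copy produces an exact match. The lightly edited copy fails the exact check but still shares 60% of its shingles with the original, so a near-duplicate check would flag it. The fully reworded text, the kind of output a language model produces effortlessly, scores 0.00 on both checks even though it says the same thing.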

Phishing & Scams

Probably one of the most obvious ways fraudsters can use ChatGPT is to write phishing emails. Not only will the quality of phishing emails improve thanks to flawlessly written texts, but fraudsters will also be able to act much faster, more convincingly, and on a much larger scale with the help of ChatGPT.

“A single scammer, from their laptop anywhere in the world, can now run hundreds or thousands of scams in parallel, night and day, with marks all over the world, in every language under the sun.” (Source)

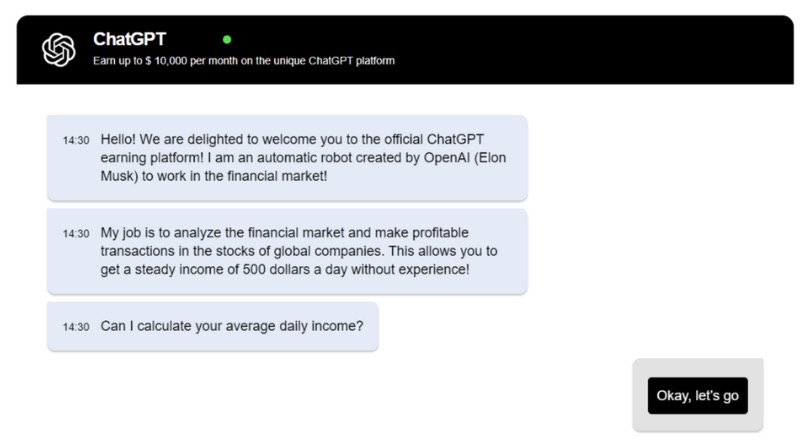

In early March, Bitdefender drew attention to a new phishing campaign that uses a fake ChatGPT platform to convince gullible investors to put their money into the platform in the hope of profiting from the supposed payouts.

Another phishing campaign took advantage of the big tech layoff wave of 2022 to target desperate workers looking for a new job. The fraudsters pose as employers or recruiters and are very skilled at making their business profiles (e.g., on LinkedIn) look legitimate.

Once a victim is on the hook, the fraudsters eventually ask them to pay for a laptop and to hand over their bank details so that the cost can supposedly be refunded. The victim believes they have found a new job and will receive a laptop, along with a refund for it, within the next few days. In reality, the fraudsters steal the money and the personal data (including ID and bank details) and then disappear for good.

Automatically generated malicious code

ChatGPT has been trained not only on billions of texts, but also on countless lines of code. Other AIs, such as GitHub’s Copilot (or Copilot X, which incorporates GPT-4), have been trained specifically on code.

While these tools can save developers a lot of time in their daily work, they also create a new and serious problem: they allow inexperienced developers to create complex malware using simple text instructions.

“Shortly after the public release of ChatGPT, a Check Point Research blog post of December 2022 demonstrated how ChatGPT can be used to create a full infection flow, from spear-phishing to running a reverse shell that accepts commands in English.” (Source)

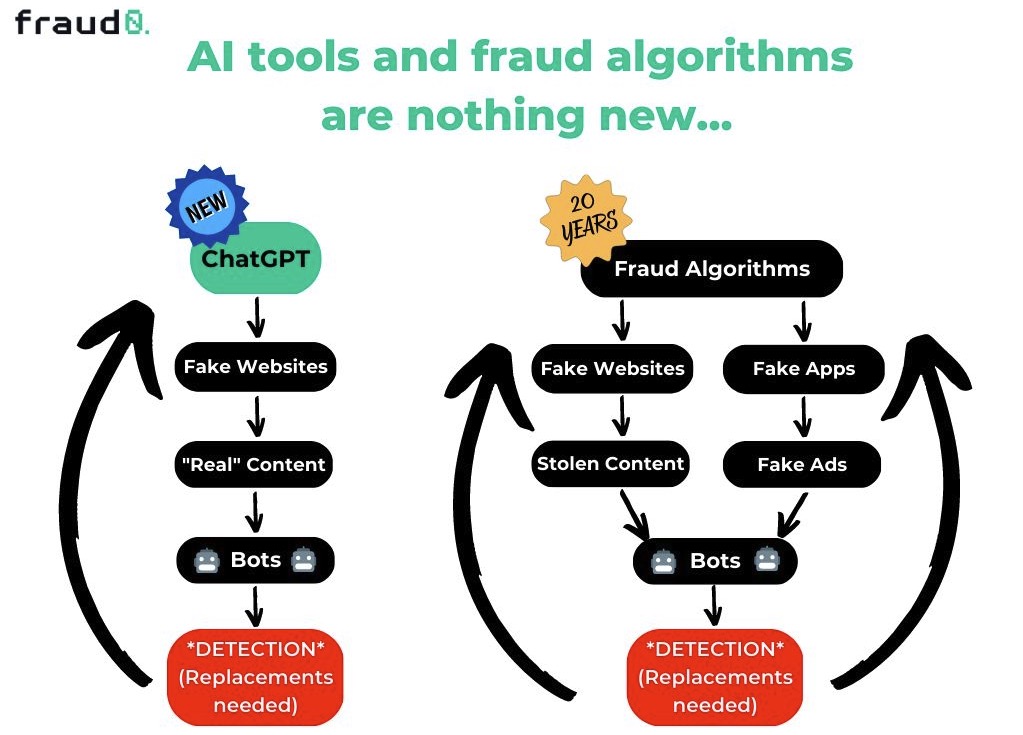

Advertising fraud with fully automated fake websites

If you combine all the potentially damaging use cases of ChatGPT, one thing quickly becomes clear: it has never been easier for fraudsters to fully automate the technical foundation, the content, and the “marketing” of a fake website or app.

The clever part is that these fakes don’t have to copy an existing website or app at all, but can offer unique content by combining different AIs. The linguistically perfect texts for the website come from ChatGPT, the images from Midjourney or DALL-E, and the banner ads that attract visitors to the site can be created with tools like AdCreative.

In fact, fraudsters have been using various tools to create fake websites with plagiarized content for several years. In the past, however, they relied on so-called “article spinner” tools, which simply replaced individual words in a predefined text with synonyms. Now, with tools like ChatGPT and image generators like Midjourney, fraudsters can create unique content that is almost indistinguishable from the real thing.

It is therefore not surprising that there are already countless tutorials on the Internet on how to create a website automatically with ChatGPT.

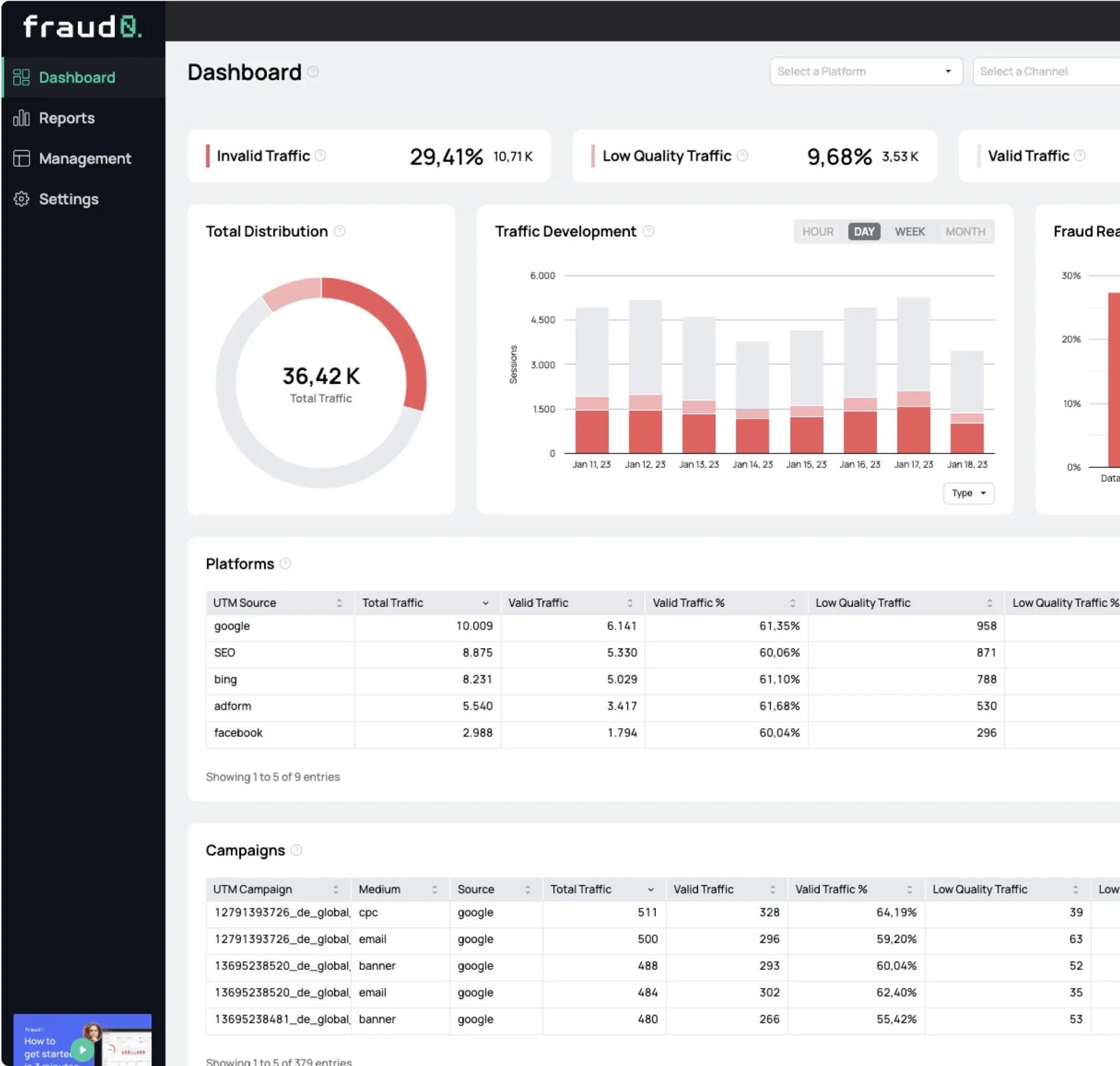

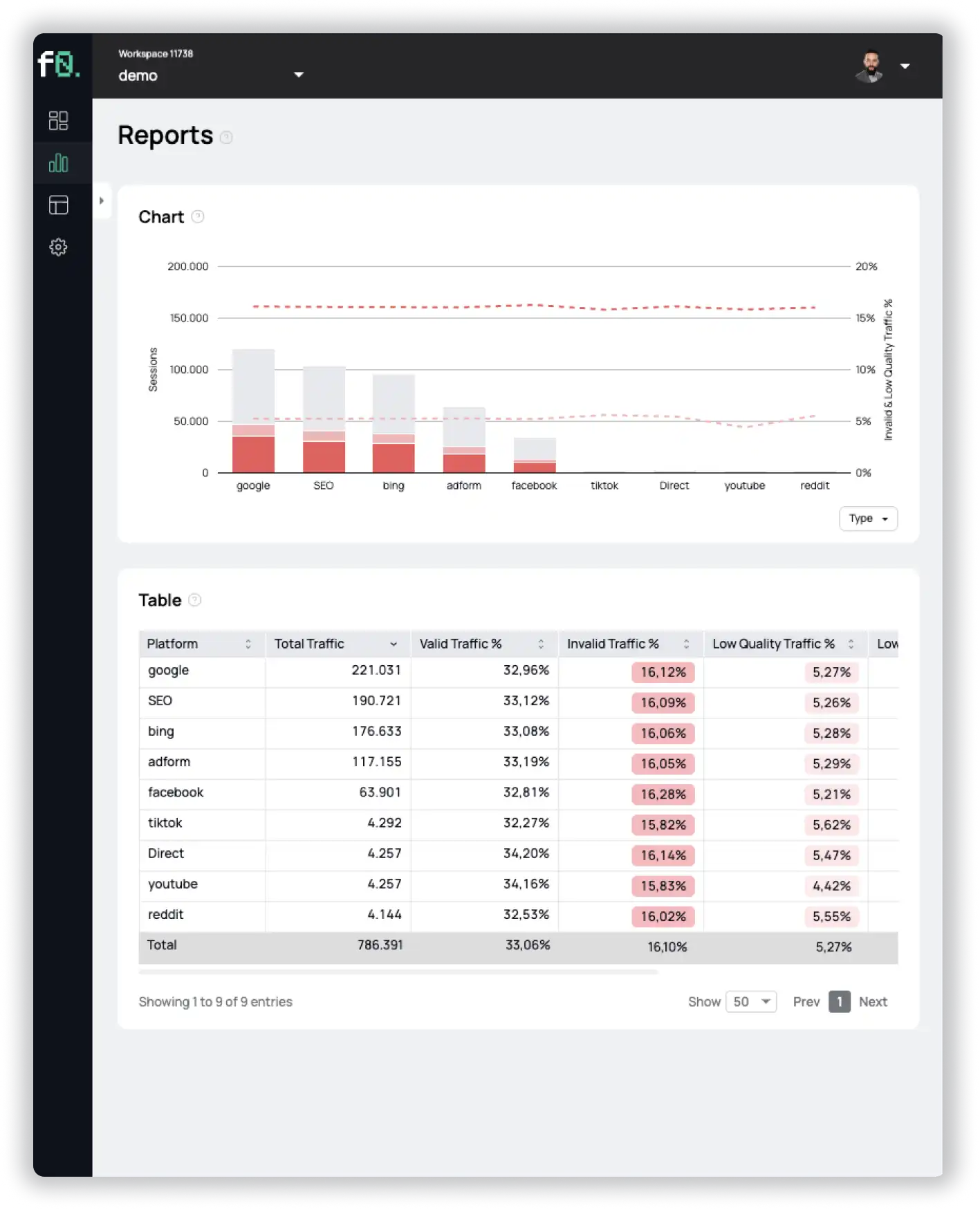

This makes it even easier for technically inexperienced fraudsters to create fake sites and equip them with ad placements. Advertisers then buy these placements primarily through so-called open auction inventory.

The problem is that real people often never see these fake websites; instead, the sites are monetized with bot-generated traffic. Advertisers thus end up paying to show ads to bots on websites that were generated automatically by AI.

Our prediction: ChatGPT will make life much easier for fraudsters

Having shown a variety of ways in which (advertising) fraudsters can use ChatGPT, we would like to conclude with a brief prediction.

We think that AIs like ChatGPT will make life much easier for fraudsters. It has never been easier to automatically create texts, images and code and to combine the individual components.

Specifically, we expect the following:

- More propaganda and disinformation campaigns

  Statements from AIs like ChatGPT could come to be treated as a benchmark for truth. After all, if a machine trained on billions of texts says something, many people will assume it must be true. Especially during an election campaign, this can cause enormous damage and a loss of trust.

- Better phishing campaigns

  The spelling and grammar of phishing emails will improve very quickly, making it increasingly difficult to distinguish scams from genuine emails. Email communication between fraudsters and victims could also be automated in the future. This would drastically reduce the time fraudsters need, allowing them to focus on producing even more, and even better, phishing emails.

- More fake websites

  We also expect a massive increase in fake websites that are misused for advertising fraud. These sites show up as inventory in so-called DSPs (demand-side platforms) and are primarily used to deliver advertising to artificial, bot-generated traffic. There are two reasons for this increase: the barrier to entry for technically inexperienced fraudsters has dropped dramatically, and experienced fraudsters now have a way to make their websites look even more real.

  We also expect such fake websites to appear more and more often in organic search results. A key factor is that although ChatGPT’s texts are based on existing content, they do not count as “duplicate content” in the classic sense, because they are not 1:1 copies. This makes it much easier for websites that rely purely on AI-generated content to rank organically in search engines. Unfortunately, there is still no reliable way to detect whether a text was created with the help of an AI.

We hope this article has given you a useful first insight into the potential of ChatGPT for (advertising) fraud and raised your awareness of what lies ahead.

To continue protecting your ad budget from bots and fake websites, sign up for a free 7-day trial of fraud0.

- Published: April 12, 2023

- Updated: July 2, 2025
