
Sam Altman: “Platforms Feel Fake.” And the Bot Internet Proves It

Cybersecurity Content Specialist

There’s a fringe idea floating around called the Dead Internet Theory: that most of what we see online is no longer human. That content, traffic, and interactions are dominated by bots, AI agents, and automation. It always sounded like a dystopian conspiracy. A few years ago, you might have laughed it off. But now? Things are changing fast.

OpenAI CEO Sam Altman recently put it in blunt terms: “Platforms like X and Reddit feel very fake.”

He added that a year or two ago it wasn’t like this. That’s how quickly the shift is happening.

Ghosts in the Machine

Bots have quietly become a large, hidden share of the web. Cloudflare Radar reports that around 30% of all global traffic is already automated: crawlers, scrapers, AI bots. And in some regions, bot activity has even surpassed human traffic.

So the “dead internet” idea isn’t so far-fetched after all. What we’re scrolling through every day is already shaped as much by machines as by people. Analytics, ad impressions, page views: they all look less reliable once you realize how much of the underlying traffic comes from non-humans.

The Rise of AI Scrapers and Agents

If yesterday’s internet was distorted by simple bots, today we’re already facing two new layers. First are the LLM scrapers: bots that crawl enormous amounts of online content to feed large language models. Unlike traditional crawlers, they don’t just index pages. They pull in full articles, posts, images, or code. Cloudflare has pointed to a growing “crawl-to-referral gap,” where these bots consume content heavily but send almost no human traffic back to the sites they crawl.
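To make the gap concrete, here is a minimal sketch of how a site owner might put a number on it. It assumes you can already count, for one AI platform over one period, the requests made by its crawler and the human visits that arrived via its referrals. The function name, the parameters, and the sample figures are hypothetical and are not taken from Cloudflare's methodology.

```python
def crawl_to_referral_ratio(crawler_requests: int, referred_visits: int) -> float:
    """Crawler requests made per human visit referred back (assumed definition)."""
    if crawler_requests < 0 or referred_visits < 0:
        raise ValueError("counts must be non-negative")
    if referred_visits == 0:
        # No visits came back: the gap is unbounded if any crawling happened.
        return float("inf") if crawler_requests else 0.0
    return crawler_requests / referred_visits


# Hypothetical monthly counts for one AI platform (illustrative numbers only).
ratio = crawl_to_referral_ratio(crawler_requests=12_000, referred_visits=40)
print(f"{ratio:.0f} crawler requests per referred visit")
```

The higher the ratio, the more content a platform takes relative to the audience it sends back.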

On top of that, AI agents have already arrived. They don’t just collect information, they act. Agents can browse, click, interact, and even post content that looks convincingly human. Instead of passively training models in the background, they’re actively shaping the online environment in real time. For a deeper dive into how they’re reshaping the marketing world, check out our blog post on Marketing in the AI Era.

And the pace of change is dramatic. Just a year or two ago, these problems felt niche. Now, as Sam Altman put it, platforms like Reddit and X feel “very fake” — a shift that has happened almost overnight.

Why It Matters

When bots and AI agents dominate, the fundamentals of the web start to wobble. Metrics that businesses depend on, from engagement to conversions, lose their meaning. Publishers see traffic counts go up, but with fewer real people behind them. Advertisers spend money on impressions that may not be human at all. And the everyday experience of the internet, once built on authentic interaction, risks turning into a simulation where it’s impossible to tell who or what you’re engaging with.

The problem isn’t that bots exist; they always have. It’s the scale, speed, and sophistication of today’s automated traffic that’s new. And unlike earlier generations of the web, we’re no longer talking about background noise. Bots and AI crawlers are now among the loudest signals online.

What Comes Next

The web isn’t “dead” yet, but its identity is under strain. If current trends continue, human activity will increasingly be the minority signal in a flood of automated noise. Cloudflare and others are already experimenting with solutions, from blocking AI crawlers by default to exploring “pay per crawl” models, but it’s clear this battle is only beginning.

For businesses, publishers, and advertisers, the lesson is simple: don’t assume the numbers are real. Protecting KPIs means acknowledging that a large part of the internet no longer comes from humans and that the line between organic growth and automated distortion is fading fast.
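As a first sanity check on your own numbers, you can look at how much logged traffic openly identifies itself as automated. The sketch below is a rough illustration, not a detection method: it assumes you have a list of User-Agent strings from your access logs, and the token lists and function names are our own illustrative choices. It only catches bots that declare themselves, so automation that imitates a browser ends up in the "unlabeled" bucket and the true bot share may be higher.

```python
from collections import Counter

# Self-declared AI crawler tokens (a small, non-exhaustive sample).
AI_CRAWLER_TOKENS = ("gptbot", "claudebot", "ccbot", "bytespider", "perplexitybot")
# Generic substrings that self-identifying bots commonly include (assumption).
GENERIC_BOT_HINTS = ("bot", "crawler", "spider", "scraper")


def classify_user_agent(user_agent: str) -> str:
    """Label a User-Agent string as 'ai_crawler', 'other_bot', or 'unlabeled'."""
    lowered = user_agent.lower()
    if any(token in lowered for token in AI_CRAWLER_TOKENS):
        return "ai_crawler"
    if any(hint in lowered for hint in GENERIC_BOT_HINTS):
        return "other_bot"
    return "unlabeled"  # may be human, or a bot posing as a browser


def traffic_breakdown(user_agents: list[str]) -> dict[str, float]:
    """Return each label's share of the given requests (empty dict if no input)."""
    counts = Counter(classify_user_agent(ua) for ua in user_agents)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()} if total else {}


# Hypothetical log sample for illustration.
sample = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 AppleWebKit/537.36 (compatible; GPTBot/1.0; +https://openai.com/gptbot)",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 Safari/605.1.15",
]
print(traffic_breakdown(sample))
```

Treat the result as a lower bound on automation and a prompt for closer inspection, not as a verdict on any single visit.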

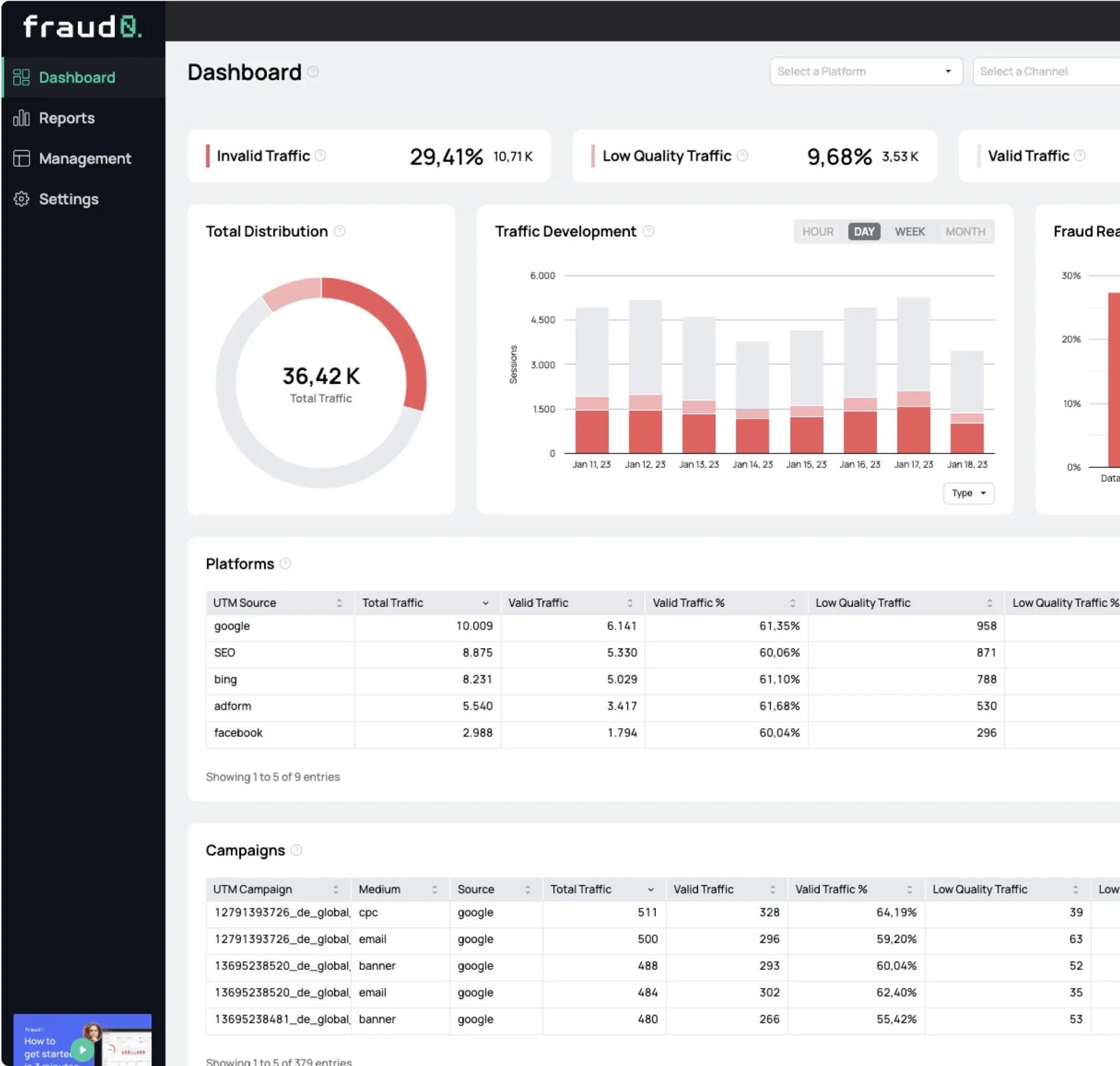

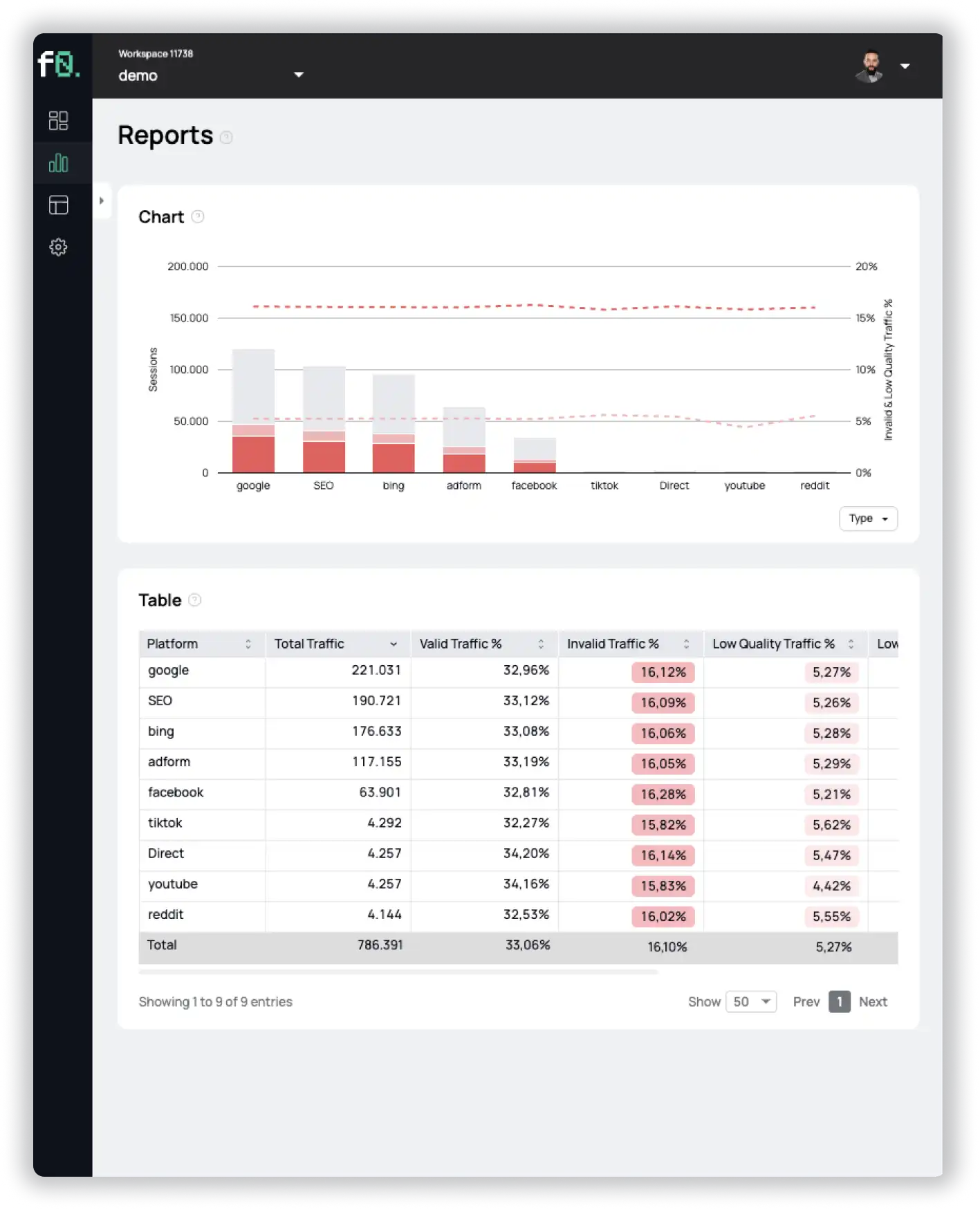

With tools like fraud0, it’s possible to get clarity on what kind of traffic actually reaches your site, whether it’s humans or bots, and to better understand how automation is shaping your data.


- Published: September 15, 2025

- Updated: September 15, 2025
